GPC 2024

Welcome to the 2024 archive! GPC 2024 took place in Breda, Netherlands, from November 12th, 2024 to November 14th, 2024 (with master classes on Monday the 11th).

Keynote

Mission: Importable - Ghost of Tsushima PC Postmortem

Bringing the beautiful island of Tsushima to the PC platform has been a significant technological undertaking. The single-platform Sucker Punch engine was made to run on the PC for the first time. To accomplish this, a custom DirectX 12 render backend had to be built from scratch, from the first triangle to the final post effect. How do you make a to-the-metal console engine run on PC? Yana Mateeva and Marco Bouterse will recount their journey and elaborate on the biggest challenges they faced and how they overcame them.

Marco Bouterse, Principal Graphics Programmer, Nixxes Software

Yana Mateeva, Graphics Programmer, Nixxes Software

Keynote

The road to Baldur's Gate 3

Baldur’s Gate 3 shipped with the fourth iteration of our custom in-house engine. This presentation will give a high-level overview of what the graphics team at Larian Studios did to achieve the rendering goals we had for our latest title. Compared to our previous project, we wanted to support larger and denser worlds, with more distant views and larger vistas. We wanted a new cinematics system that had to work in every lighting setting and environment, which also needed higher fidelity characters and environments. Everything still had to support split screen and now also run on the more modern APIs such as Vulkan and DirectX 12. I will highlight the most significant changes we made on the technical side, and some of the improvements we made to shading, lighting, and other parts of our rendering pipeline. I will also cover some of our gameplay-related rendering features in a bit more detail and explain how we render our surfaces and clouds, and how we handle fading and see-through effects in our deferred renderer.

Wannes Vanderstappen, Senior Graphics Programmer, Larian Studios

Keynote

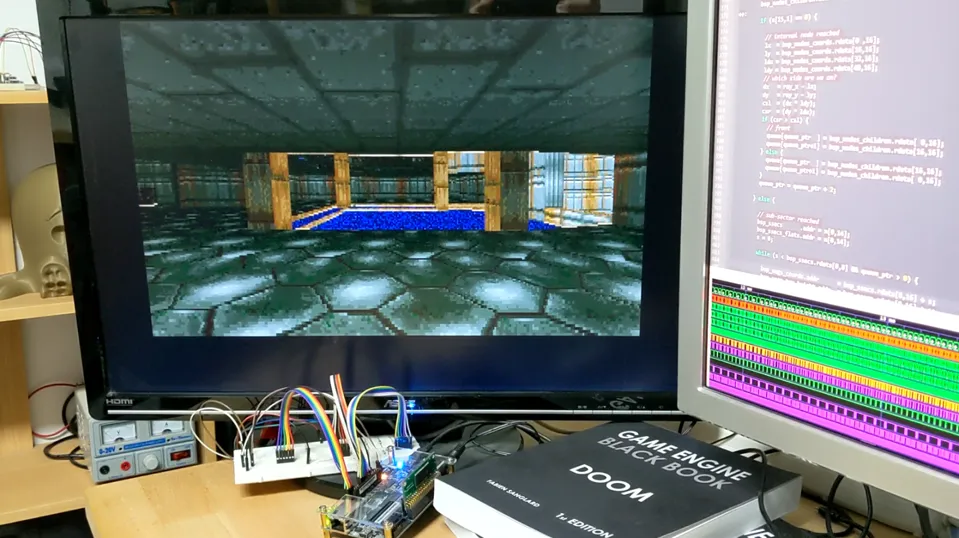

From gates to pixels: making your own graphics hardware

In this talk I will discuss how, using recent open source tooling, you can design your own hardware, from FPGAs to ASICs (your own piece of silicon!), to implement your favorite graphics algorithms as pure wired logic. From making a GPU with a 90’s twist to creating your own computer from scratch, this opens incredible new possibilities.

Sylvain Lefebvre, Researcher, INRIA

DirectX 12 Memory Management at Nixxes

Discover the challenges and successes Nixxes faced in developing an optimal memory management strategy adaptable to a wide range of hardware. Learn how we manage video memory oversubscription by automatically transferring low-priority resources to and from system memory, and how we utilize multi-threading to ensure a seamless experience. Explore the statistics we monitor and how we use them to identify and address memory-related issues such as waste and stutters. Finally, understand how we use specialized allocators to address and improve these issues and learn about a few of our other strategies.

Hilze Vonck, Senior Graphics Programmer, Nixxes Software

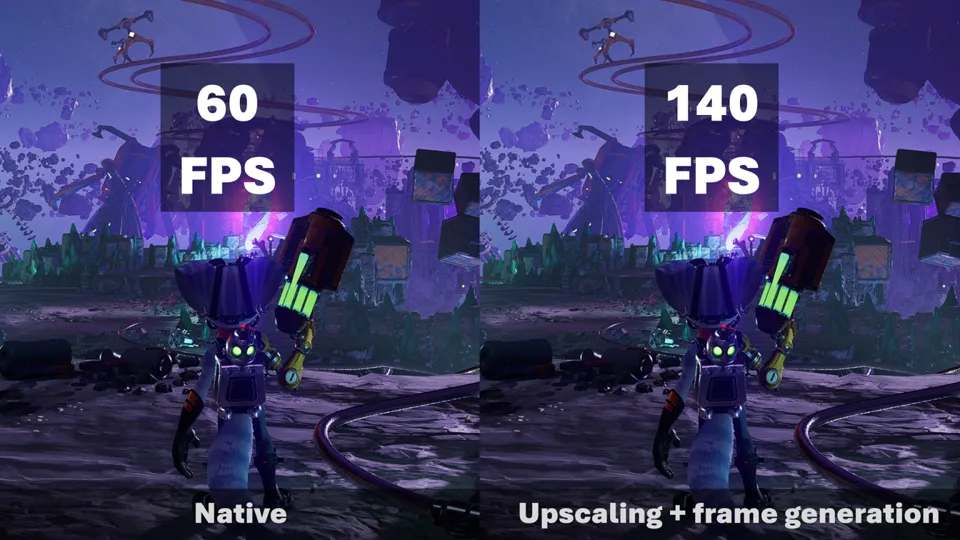

Magic Pixels: An introduction to frame generation and upscaling

Over the last few years, a lot of exciting new techniques have popped up that improve the performance of games by a lot! This talk is an easy introduction to the current state-of-the-art frame generation and upscaling methods, with a focus on AMD’s recently launched FSR 3.1. How do these almost magical methods generate pixels and even entire frames? What are the tradeoffs of using these techniques, and what have been the biggest pitfalls for us in getting these methods ship-ready?

Menno Bil, Junior Graphics Programmer, Nixxes Software

Pondering Orbs: The Rendering and Art Tools of "COCOON"

Rendering the game’s beautiful worlds within worlds takes a few tricks, from thick billowing froxel fog and spherical harmonics volumetric lightmaps to a host of visual effects like crystalline Voronoi bridges, SDF shoreline ripples on water, and warping through ponderable orbs. Mikkel Svendsen presents how these features, tools and effects were implemented and shipped on the game’s target platforms, how they contributed to the game’s look, and how it all works together with an MSAA-friendly rendering pipeline, including the gotchas of fitting these features and effects into it.

Mikkel Svendsen, Render Programmer, Geometric Interactive

Boon or Curse: A custom rendering engine for the development of Hades and Hades II

Explore the pivotal role of Supergiant Games’s rendering engine in shaping the immersive visuals of their latest titles. Dive into the triumphs and tribulations faced by the small but passionate team as they navigate the complexities of developing and refining their technology. From unlocking the art team’s creative potential to wrestling with technical constraints, Devansh presents how creating a fully custom rendering pipeline can prove to be a blessing in the pursuit of crafting unforgettable gaming experiences.

Devansh Maheshwari, Graphics Software Engineer, Supergiant Games

Occupancy explained through the AMD RDNA™ architecture

Learn about the AMD RDNA™ graphics hardware architecture and its execution model, and discover how the concept of occupancy naturally emerges from how it works. Understand the critical importance of latency hiding for performance and the hardware mechanisms that enable it on the GPU. Join François in investigating practical examples with the AMD Radeon™ Developer Tool Suite where he explains why occupancy is a useful metric and when you should care about it. He will also provide tips and tricks from the trenches on how to improve the performance of latency-bound workloads.

François Guthmann, Senior Developer Technology Engineer, Advanced Micro Devices (AMD)

Adaptive Refresh Rates on Android

A deep dive into how the Android Framework supports variable refresh rates, from 1 Hz to 120 Hz, and how it blends the refresh rates of dynamic content on the screen.

Ramnivas Indani, Software Engineer, Google Inc.

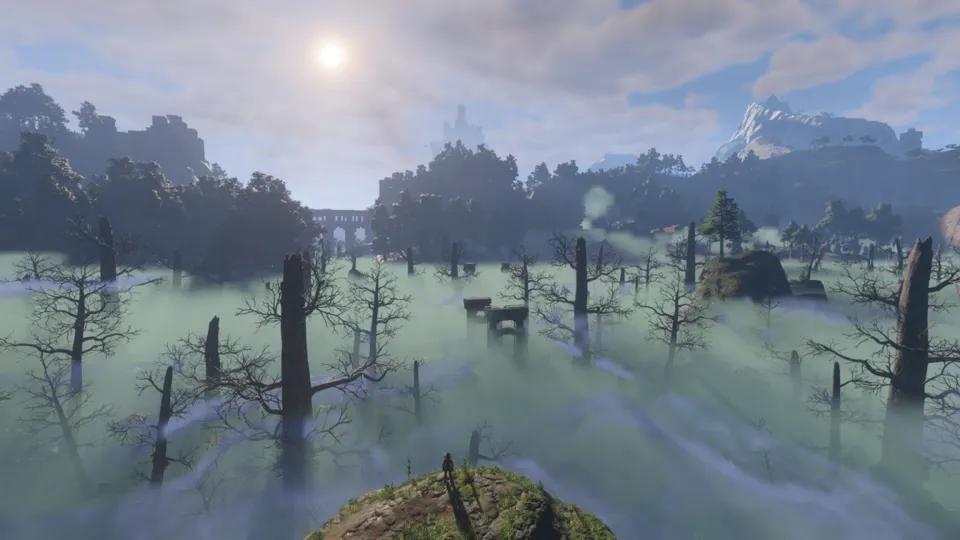

Volumetric Fog in Enshrouded

Volumetric fog is a core aspect of our game, Enshrouded, and has been since the beginning of the project. We’ll skip the basics of rendering volumetrics and instead give a short overview of the various approaches we tried throughout the development of the game to render dynamic volumetric effects over a large distance in our custom in-house engine. The main focus will be how we render fog in the shipping version. We’ll also talk about how we support dynamic fog effects and some tricks that make use of the voxel representation of our world.

Lukas Feller, Graphics Programmer, Keen Games

Dynamic Diffuse & Specular Global Illumination in Enshrouded

Enshrouded has a voxel-based environment, where nearly everything can be destroyed or built from scratch, so there is no room for pre-baked lighting. This presentation takes you on a journey through the development process of Enshrouded GI and shows which techniques worked for us and which didn’t. Discover how we moved from Vulkan Raytracing to our own SDF rays to run on a wide range of GPUs. From shiny specular armor reflections to diffuse foggy forests or deep dark caves, Enshrouded GI is capable of handling various situations dynamically and in real time. All rounded off with a bit of stochasticity to make everything fast and smooth. Enshrouded GI shows what we achieved in a small team with our own handcrafted voxel engine.

Jakub Kolesik, Senior Rendering Engineer, Keen Games

Vulkan in Enshrouded

Shipping Enshrouded on PC with just a Vulkan backend has been a lot of work and we learned a lot along the way. This presentation will discuss the good, the bad and the ugly of Vulkan from the perspective of a small indie studio shipping an ambitious game like Enshrouded using our custom Vulkan engine. We’ll share with you the details on how we evolved our internal graphics API, how we managed memory, what we did about synchronization and pipeline compilation, and what issues we had along the way.

Julien Koenen, Technical Director, Keen Games

Lukas Feller, Graphics Programmer, Keen Games

Rendering tiny glades with entirely too much ray marching

Learn how Tiny Glade’s custom engine draws lush meadows, fluffy trees, and player-created castles and towns. We’ll explore a range of bespoke techniques employed in the game, from real-time global illumination to tilt-shift DoF and stable shadows with continuous time of day. All running on 10-year-old hardware, laced with excessive amounts of ray marching, GPU-driven rendering, and just the right sprinkle of ray tracing.

Tomasz Stachowiak, Technical Debt Generator, Pounce Light

Harnessing Wave Intrinsics For Good (And Evil)

Optimizing shaders using wave intrinsics has been possible for many years and can offer significant performance benefits. Whether you’re looking to compact data, run parallel reductions, or reduce register pressure through scalarization, wave intrinsics are invaluable tools. Alex will delve into alternative uses of wave intrinsics that range from useful to mere curiosities, highlighting how to discover and develop your own tricks and techniques. You too can build a beautiful shader that will send you straight to complexity jail.

Alexandre Sabourin, Graphics Lead, Snowed In Studios

Arm Accuracy Super Resolution: Bringing efficient spatio-temporal Super Resolution to mobile

Super-Resolution has become an extremely popular topic on mobile in the past year. However, advanced techniques in this area were originally designed and optimized with desktops and consoles in mind, leaving the mobile space out of the equation. This was a big limitation for developers who, in order to benefit from the performance and bandwidth savings upscaling workflows provide, found themselves using spatial upscalers that generally gave poor quality and were bound to use a really conservative per-dimension upscaling ratio. Arm-ASR is our technique derived from AMD’s “FidelityFX Super Resolution 2” that has been heavily optimized for mobile. In this talk we will provide an overview of the journey we took to bring this use case to mobile.

Sergio Alapont, Staff Software Engineer, Arm

Panagiotis Christopoulos Charitos, Principal Software Engineer, Arm

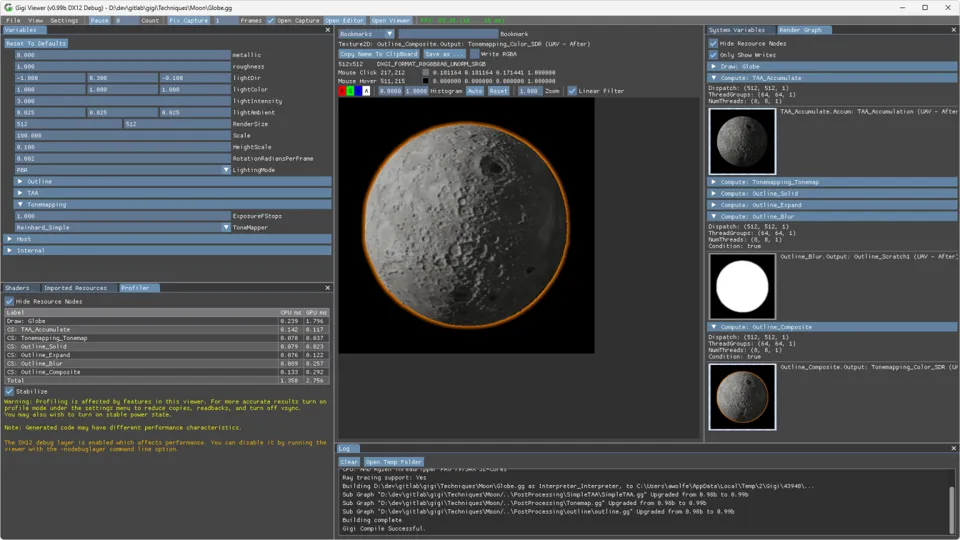

Gigi: A Platform for Rapid Graphics Development and Code Generation

Graphics programming has never been more difficult or time consuming than it is today. Working at the lowest level, modern explicit APIs like DX12 and Vulkan can take several weeks of effort before seeing the first triangle. Working at a higher level, we have fully featured engines which have long compile times and steep learning curves, and in which it can be difficult to do isolated work to be sure of results. These challenges make it harder for newcomers to learn graphics, make it take longer to get things done, and also make iteration take longer. Porting rendering work between APIs and engines is painful as well: shaders often port fairly well, but the CPU-side work is often the main problem. Gigi addresses these problems by allowing rendering techniques to be described in the Gigi editor concisely, but completely, working at a coarser abstraction layer than the typical HAL. The Gigi viewer allows viewing, profiling, and debugging of the technique in real time, and is scriptable with Python to automate tasks such as automated tests or laborious data gathering. The Gigi compiler then generates code for the technique towards a chosen target, making code that is well formatted, well commented, has friendly variable names, and should pass a code review. At EA, we have used Gigi to aid prototyping and development of rendering techniques, we have used Gigi in published research to run the experiments as well as gather images for diagrams and data for graphs, and we have used Gigi to generate code that is released to the public. Gigi is open sourced and available for use, with the viewer, editor, and DX12 code generator available to the public. We hope to include other APIs and engines in the public repo as development continues.

Alan Wolfe, Applied Graphics Research, EA SEED

Intro to GPU Occlusion

For games that heavily feature dynamic or user-generated levels, classic optimization techniques like static occlusion hierarchies are less viable due to their inflexibility. Runtime-generated acceleration structures introduce their own fair share of CPU and memory overhead. In this talk, Leon will cover an adaptation of GPU-based occlusion culling using a hierarchical z-buffer, which achieved significant performance improvements with minimal computation time.

Leon Brands, Graphics Programmer, Behaviour Interactive

Solving Numerical Precision Challenges for Large Worlds in Unreal Engine 5

Unreal Engine 5 expands the world size from a 22 km radius to 88 million kilometers. This presentation explores the evolution of our approach to supporting these large-scale open worlds on the GPU. It delves into the complexities of maintaining both numerical precision and performance in GPU computations, both fixed-function and artist-driven. We’ll share the engineering hurdles encountered in Unreal Engine 5.4 and our hybrid solution that combines the strengths of various methods.

Wouter De Keersmaecker, Rendering Programmer, Epic Games

Forging our own path: NPR Light Rendering in Unreal Engine

Telltale’s games have a legacy of unique visual styles which put unique pressures on our renderer. As Telltale transitioned our development away from our internal engine to Unreal Engine, we encountered challenges in how to stay faithful to our legacy in an engine we no longer owned. In tackling these challenges, we built TTRender, a new plugin-based lighting renderer integrated into Unreal Engine.

Indy Ray, Lead Engineer, Telltale Games

Raytracing in Snowdrop

Snowdrop is one of Ubisoft’s internal game engines, powering games such as “The Division”, “Avatar: Frontiers of Pandora”, and more recently “Star Wars: Outlaws”. Raytracing made its debut in the engine with “Avatar: Frontiers of Pandora”, which included raytraced global illumination, raytraced reflections, raytraced distant shadows, and raytraced audio. In addition to those, “Star Wars: Outlaws” also included raytraced direct lighting. This talk will go over the raytracing pipeline of the Snowdrop engine, and cover the different optimizations needed to allow all of those raytracing effects to be enabled on all consoles as well as PC.

Quentin Kuenlin, Associate Lead Rendering Programmer, Massive Entertainment

Neural Networks in the Rendering Loop

At Traverse Research we’ve developed a cross-platform GPU-driven neural network crate (yes we develop in Rust!) in our breda engine. This talk explores the breda-nn framework, which enables seamless integration of neural networks within the render loop via our render graph system. We’ll discuss our strategy for in-the-loop training and inference, showcase practical applications in neural materials and neural radiance caching, and demonstrate how these techniques integrate with our renderer.

Luca Quartesan, Head of Machine Learning, Traverse Research